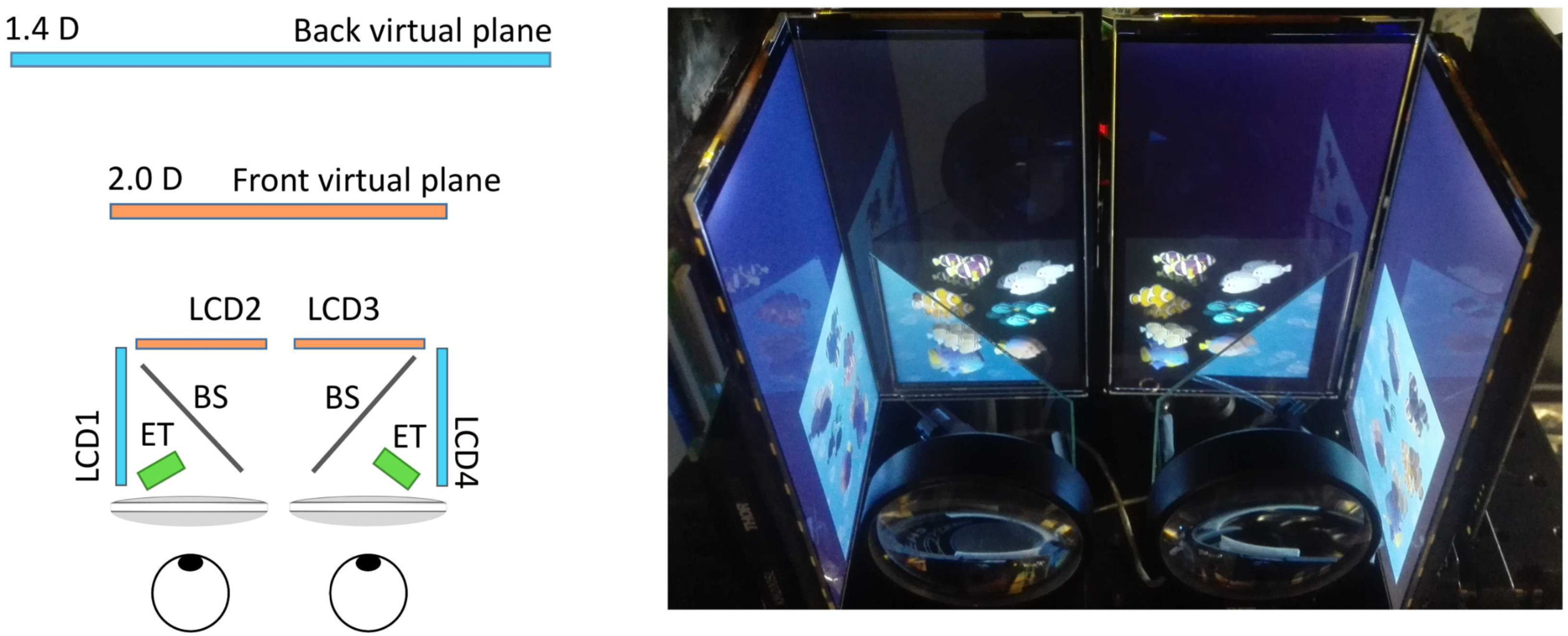

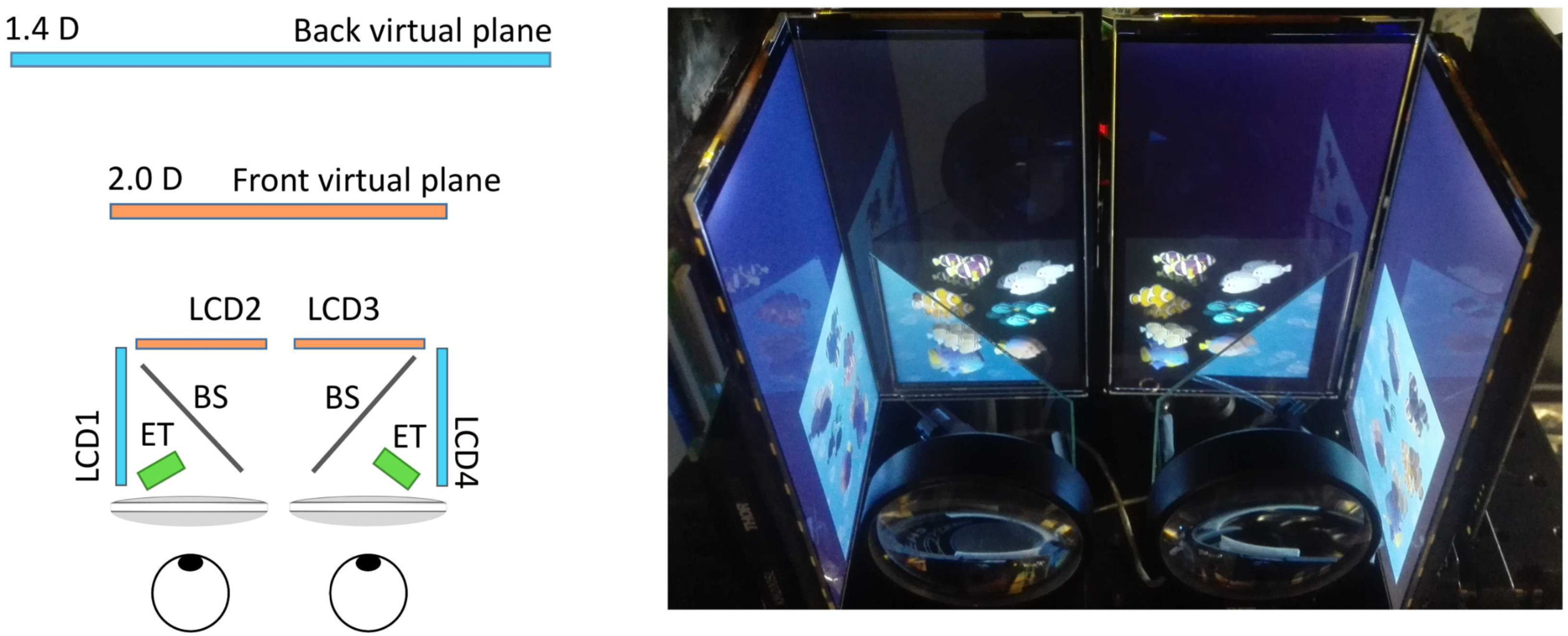

Multi-focal plane and multi-layered light-field displays are promising solutions for addressing all visual cues observed in the real world. Unfortunately, these devices usually require expensive optimizations to compute a suitable decomposition of the input light field or focal stack to drive individual display layers. Although these methods provide near-correct image reconstruction, their significant computational cost prevents real-time applications. A simple alternative is a linear blending strategy that decomposes a single 2D image using depth information. This method provides real-time performance, but it generates inaccurate results at occlusion boundaries and on glossy surfaces. This paper proposes a perception-based hybrid decomposition technique that combines the advantages of the above strategies and achieves both real-time performance and high-fidelity results. The fundamental idea is to apply expensive optimizations only in regions where they are perceptually superior, e.g., depth discontinuities at the fovea, and to fall back to less costly linear blending otherwise. We present a complete, perception-informed analysis and model that locally determine which of the two strategies should be applied. This prediction is then used by our new synthesis method, which performs the image decomposition. The results are analyzed and validated in user experiments on a custom multi-plane display.
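To make the linear blending baseline mentioned in the abstract concrete, the following is a minimal sketch of depth-weighted blending for a single-channel image. It is not the authors' implementation. The function names `blend_weights` and `decompose`, the use of a sorted list of plane depths (for example in diopters), and the assumption that display layers add up optically are all illustrative choices made here.

```python
from bisect import bisect_right


def blend_weights(depth, planes):
    """Return one weight per focal plane for a single pixel.

    `planes` is assumed to be sorted in ascending order (e.g. in diopters).
    The weights are linear in depth between the two nearest planes and
    always sum to 1.
    """
    weights = [0.0] * len(planes)
    # Clamp depths that fall outside the range covered by the planes.
    if depth <= planes[0]:
        weights[0] = 1.0
        return weights
    if depth >= planes[-1]:
        weights[-1] = 1.0
        return weights
    # Find k such that planes[k] <= depth < planes[k + 1].
    k = bisect_right(planes, depth) - 1
    t = (depth - planes[k]) / (planes[k + 1] - planes[k])
    weights[k] = 1.0 - t
    weights[k + 1] = t
    return weights


def decompose(image, depth_map, planes):
    """Split a 2D intensity image into one layer per focal plane.

    `image` and `depth_map` are nested lists of equal shape.
    """
    layers = [[[0.0] * len(row) for row in image] for _ in planes]
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            per_plane = blend_weights(depth_map[y][x], planes)
            for i, w in enumerate(per_plane):
                layers[i][y][x] = w * value
    return layers
```

Because each pixel is handled independently from its own depth value, this kind of decomposition is cheap, but it has no notion of what lies behind an occluder or of view-dependent reflections, which is consistent with the artifacts at occlusion boundaries and on glossy surfaces noted above.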

MPI Project homepage

Paper (preprint)

Supplemental document

Video (137MB, mp4)
