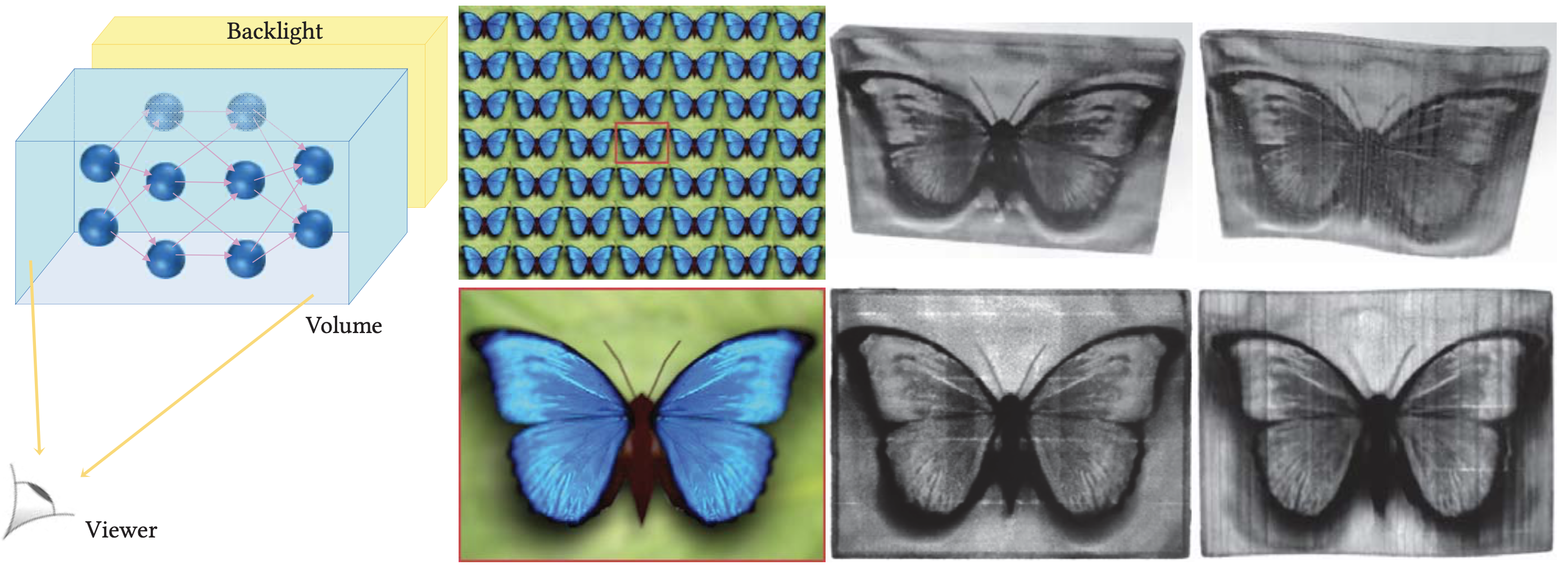

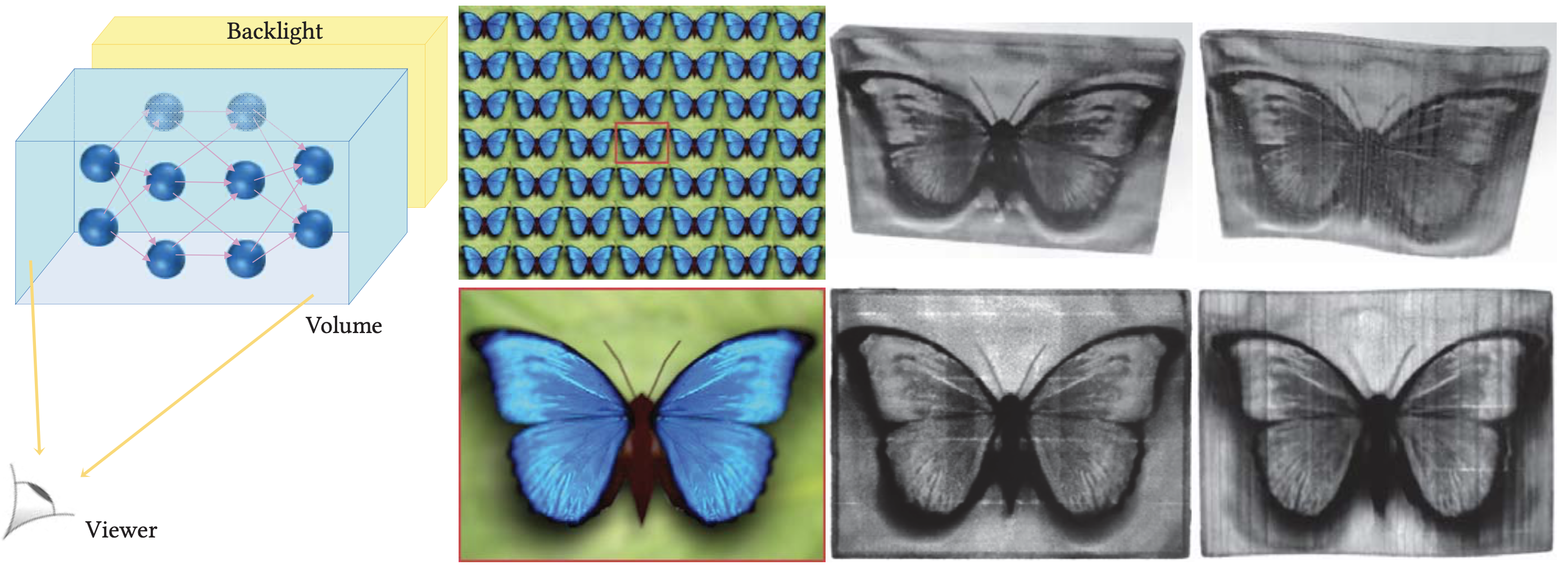

Modern 3D printers are capable of printing large light-field displays at high resolutions. However, optimizing such displays over a full 3D volume for given light-field imagery remains a challenging task. Existing light-field displays optimize over relatively small resolutions using a few co-planar layers in a 2.5D fashion to keep the problem tractable. In this paper, we propose a novel end-to-end optimization approach that encodes input light-field imagery as a continuous-space implicit representation in a neural network. This allows fabricating high-resolution, attenuation-based volumetric displays that exhibit the target light fields. In addition, we incorporate the physical constraints of the material into the optimization so that the result can be printed in practice. Our simulation experiments demonstrate that our approach significantly improves visual quality compared to multilayer and uniform grid-based approaches. We validate our simulations with fabricated prototypes and demonstrate that our pipeline is flexible enough to allow fabrication of both planar and non-planar displays.

Thanks to all the anonymous reviewers for shaping the final version of the paper.

The Author(s) / ACM. This is the author's version of the work. It is posted here for your personal use. Not for redistribution. The definitive Version of Record is available at doi.acm.org.
