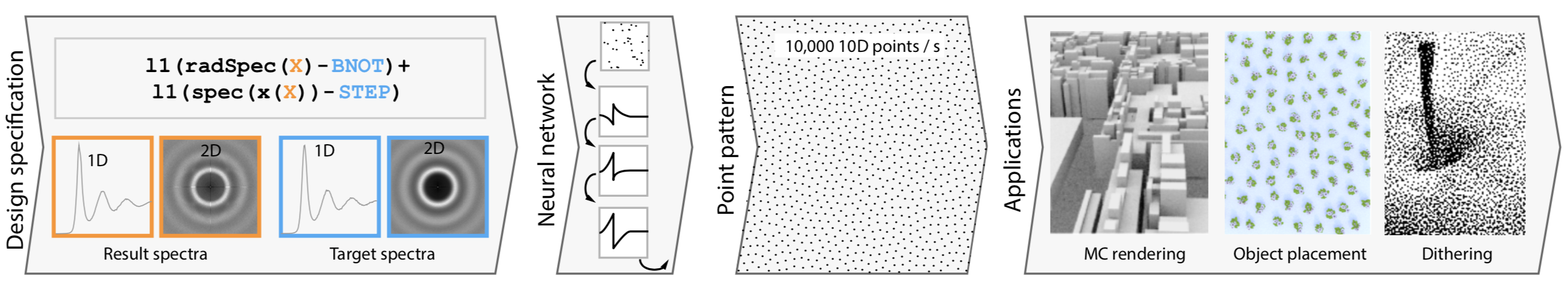

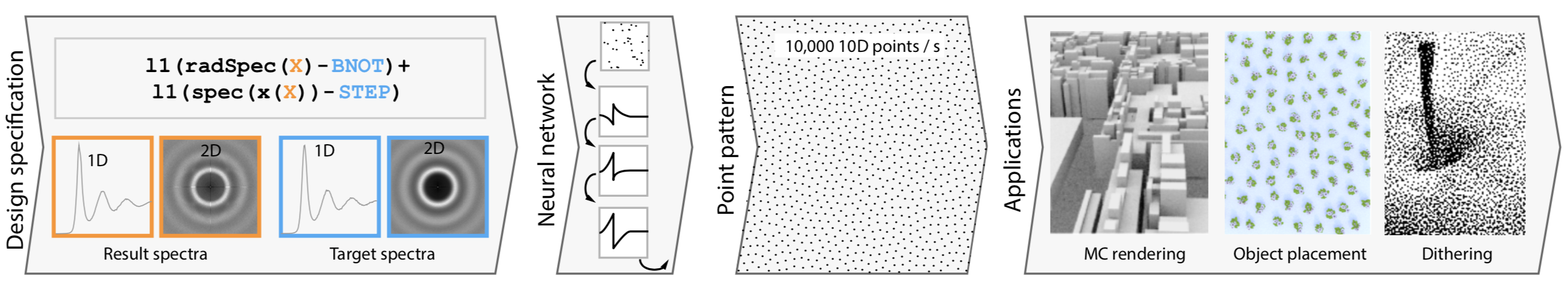

Designing point patterns with desired properties can require substantial effort, both in hand-crafted coding and in mathematical derivation. Retaining these properties in multiple dimensions or for a large number of points can be challenging and computationally expensive. To tackle these two issues, we propose to automatically generate scalable point patterns from design goals using deep learning. We phrase pattern generation as a deep composition of weighted, distance-based unstructured filters. Deep point pattern design means optimizing over the space of all such compositions according to a user-provided point correlation loss: a small program that measures a pattern's fidelity with respect to its spatial or spectral statistics, linear or non-linear (e.g., radial) projections, or any arbitrary combination thereof. Our analysis shows that we can emulate a large set of existing patterns (blue-, green-, step-, projective-, and stair-noise, among others), generalize them to countless new combinations in a systematic way, and leverage existing error estimation formulations to generate novel point patterns for a user-provided class of integrand functions. Our point patterns scale favorably to multiple dimensions and numbers of points: we demonstrate nearly 10k points in 10-D produced in one second on a single GPU.
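The abstract describes two ingredients: a pattern generator built as a composition of weighted, distance-based unstructured filters, and a point correlation loss that scores the result. The minimal sketch below illustrates both ideas in plain Python under loudly stated assumptions: points live on the toroidal unit square, each hypothetical filter layer uses piecewise-constant distance weights, and the loss compares a radially averaged periodogram against a step-noise-style target. All names (`distance_weighted_filter`, `deep_pattern`, `spectral_loss`) and the weight parameterization are hypothetical illustrations, not the paper's architecture or its released source code. The paper optimizes the filter compositions against the loss with deep learning; this sketch only evaluates a fixed, hand-picked composition and its loss.

```python
import cmath
import math
import random


def distance_weighted_filter(points, weights, radius):
    """Hypothetical unstructured filter on the unit torus [0, 1)^d.

    Each point moves by a weighted sum of the toroidal offsets to all
    neighbours closer than `radius`. The weight is piecewise constant in
    distance: `weights[b]` applies to the b-th of len(weights) equal-width
    distance bins. Negative weights push points apart, positive ones pull
    them together.
    """
    bins = len(weights)
    out = []
    for i, p in enumerate(points):
        shift = [0.0] * len(p)
        for j, q in enumerate(points):
            if i == j:
                continue
            # Shortest offset from p to q when the unit cube wraps around.
            d = [((qk - pk + 0.5) % 1.0) - 0.5 for pk, qk in zip(p, q)]
            r = math.sqrt(sum(c * c for c in d))
            if r < radius:
                w = weights[min(int(r / radius * bins), bins - 1)]
                for k in range(len(p)):
                    shift[k] += w * d[k]
        # Wrap the moved point back into the unit cube.
        out.append(tuple((pk + sk) % 1.0 for pk, sk in zip(p, shift)))
    return out


def deep_pattern(points, layers):
    """Apply a composition of filters; each layer is a (weights, radius) pair."""
    for weights, radius in layers:
        points = distance_weighted_filter(points, weights, radius)
    return points


def power_spectrum(points, max_freq):
    """Periodogram |sum_j exp(-2*pi*i f.x_j)|^2 / n of a 2-D point set at all
    non-zero integer frequencies f with |f_x|, |f_y| <= max_freq."""
    n = len(points)
    spectrum = {}
    for fx in range(-max_freq, max_freq + 1):
        for fy in range(-max_freq, max_freq + 1):
            if fx == 0 and fy == 0:
                continue
            s = sum(cmath.exp(-2j * math.pi * (fx * x + fy * y)) for x, y in points)
            spectrum[(fx, fy)] = abs(s) ** 2 / n
    return spectrum


def radial_profile(spectrum, max_freq):
    """Average the periodogram over rings of (rounded) integer radius."""
    sums = [0.0] * (max_freq + 1)
    counts = [0] * (max_freq + 1)
    for (fx, fy), power in spectrum.items():
        r = int(round(math.hypot(fx, fy)))
        if r <= max_freq:
            sums[r] += power
            counts[r] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]


def spectral_loss(points, target, max_freq):
    """Mean squared error between the radial power profile and `target`
    (a list indexed by radius 0..max_freq; radius 0 is ignored)."""
    profile = radial_profile(power_spectrum(points, max_freq), max_freq)
    return sum((profile[r] - target[r]) ** 2 for r in range(1, max_freq + 1)) / max_freq


if __name__ == "__main__":
    random.seed(0)
    pts = [(random.random(), random.random()) for _ in range(64)]
    # Hypothetical hand-picked weights; the paper optimizes the filters instead.
    layers = [([-0.05, -0.02, 0.0], 0.15)] * 3
    max_freq = 6
    # Step-noise-style target: no energy below radius 3, white-noise level (1) above.
    step_target = [0.0 if r < 3 else 1.0 for r in range(max_freq + 1)]
    print("white noise loss:", spectral_loss(pts, step_target, max_freq))
    print("filtered loss:   ", spectral_loss(deep_pattern(pts, layers), step_target, max_freq))
```

Replacing `spectral_loss` with a spatial statistic, or evaluating it on projected coordinates, would loosely mirror the other loss types the abstract lists. Making the weights trainable would require an automatic differentiation framework, which this stdlib-only sketch deliberately avoids.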

- Paper
- Supplemental document
- Source code
- PowerPoint Slides (78 MB)
- PDF Slides (20 MB)
- Fast Forward Video (100 MB)

We would like to thank all the reviewers for their detailed and constructive feedback. This work was partly supported by the Fraunhofer and the Max Planck cooperation program within the framework of the German pact for research and innovation (PFI).

The Author(s) / ACM. This is the author's version of the work. It is posted here for your personal use. Not for redistribution. The definitive Version of Record is available at doi.acm.org.
